The discovery that artificial intelligence algorithms at Google and other companies discriminated on the basis of race or gender shocked the tech community. How could this be?

Well, the tech giant has just launched Know Your Data, a tool that aims to put an end to bias in the data used to train artificial intelligence.

A real case of bias in machine learning

Let’s start with an example: we develop an algorithm that analyses hundreds of thousands of bank accounts to determine credit scores based on countless variables.

We start the machine learning process with real, historical credit databases.

Before long, we realise that this algorithm keeps assigning lower credit scores to African Americans or women.

That is, the data used to train the AI is packed with socio-cultural biases, and so are the AI's results.

And so, instead of scoring credit in a more objective and neutral way, the AI perpetuates discrimination on the basis of ethnicity or gender.
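To make the idea concrete, here is a minimal sketch of how such a disparity might be spotted once the scores exist: average the score per group and compare. The records, field names and the `mean_score_by_group` helper are all made up for illustration; they do not come from the study mentioned here or from any Google tool.

```python
from collections import defaultdict
from statistics import mean


def mean_score_by_group(rows, group_key, score_key="score"):
    """Average the score for each value of group_key (hypothetical helper)."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row[group_key]].append(row[score_key])
    return {group: mean(scores) for group, scores in grouped.items()}


# Made-up toy records, not real credit data.
rows = [
    {"gender": "female", "score": 610},
    {"gender": "female", "score": 640},
    {"gender": "male", "score": 700},
    {"gender": "male", "score": 690},
]

averages = mean_score_by_group(rows, "gender")
gap = averages["male"] - averages["female"]
print(averages, gap)
```

A gap like this does not prove discrimination on its own, since other variables may explain it, but it is the kind of signal that tells a team where to look more closely.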

This is a real case discovered in a study, and the problem has grown quickly enough to give rise to the concept of XAI, or Explainable Artificial Intelligence.

This is a trend that aims to make the processes by which AIs make decisions more transparent and understandable.

And it is in the context of this problem that Google’s artificial intelligence comes into play, with its new Know Your Data tool.

How does Google’s Know Your Data tool work?

It all starts with data.

Data is the basis of much machine learning research and development, as it helps structure what a machine learning algorithm learns and how models are evaluated and compared.

However, data collection and labelling can be tricky due to unconscious bias, data access limitations and privacy issues, among other challenges.

As a result, machine learning datasets can reflect unfair social biases in relation to race, gender, age, etc.

And Google’s artificial intelligence, like all other algorithms being developed and trained, is not free of this either.

Hence the need for a tool to help detect biases in the data that are undetectable at first glance.

Know Your Data (KYD) helps machine learning research, product and compliance teams understand datasets. KYD offers a number of features that allow users to explore and examine machine learning datasets: users can filter, group and study correlations based on annotations already present in a given dataset.

Source: https://ai.googleblog.com/2021/08/a-dataset-exploration-case-study-with.html

In addition, Google has built functionality into the tool that presents labels automatically calculated by Google’s Cloud Vision API, so that users can easily find signals that were not originally present in the dataset.

How Know Your Data will help Google’s artificial intelligence in practice

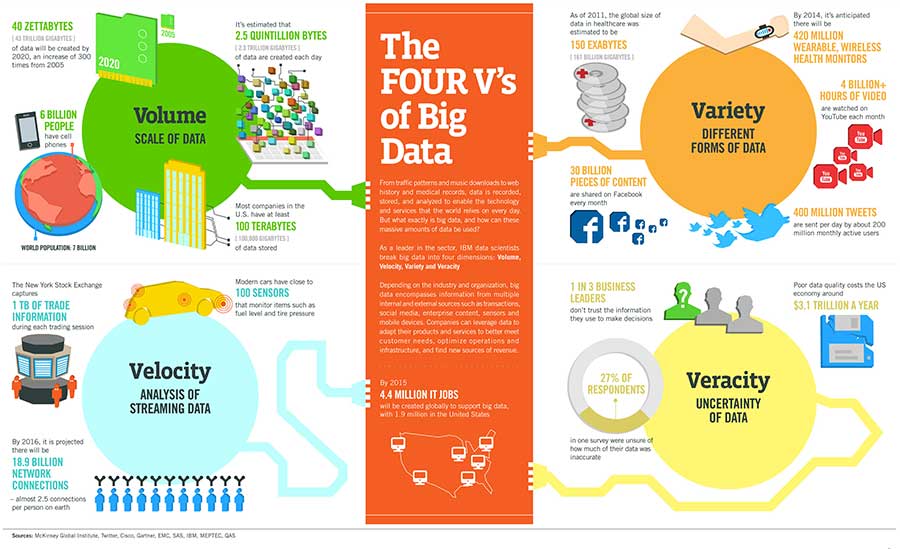

Google has shared a first use case of its tool detecting gender and age discrimination in the analysis of visual datasets.

Specifically, the case study analysed some 300,000 images of people performing activities, together with their annotations from the COCO Captions dataset, and confirmed biases in the data.

To perform the analysis, the relationship between two different signals in a dataset is examined: that is, how often they appear together compared to what would be expected by pure chance.

For example, one can count how often the terms “young” and “running”, or “old” and “running”, appear together.

Each cell indicates a positive (blue colour) or negative (orange colour) correlation between two specific signal values together with the strength of that correlation.
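The "compared to chance" idea can be sketched in a few lines of Python. The exact metric KYD uses is not stated here, so this example assumes the plain ratio of observed to expected co-occurrence (often called lift): a value above 1 corresponds to a positive association, a value below 1 to a negative one. The `cooccurrence_lift` function and the toy records are hypothetical and are not COCO data.

```python
from collections import Counter
from itertools import product


def cooccurrence_lift(records, signal_a, signal_b):
    """Return {(a, b): observed / expected} for every pair of signal values."""
    n = len(records)
    count_a = Counter(r[signal_a] for r in records)
    count_b = Counter(r[signal_b] for r in records)
    count_ab = Counter((r[signal_a], r[signal_b]) for r in records)

    lift = {}
    for a, b in product(count_a, count_b):
        # Co-occurrences expected if the two signals were independent.
        expected = count_a[a] * count_b[b] / n
        lift[(a, b)] = count_ab[(a, b)] / expected
    return lift


# Made-up toy annotations: 20 captions tagged with an age term and an activity.
records = (
    [{"age": "young", "activity": "running"}] * 6
    + [{"age": "young", "activity": "sitting"}] * 4
    + [{"age": "old", "activity": "running"}] * 1
    + [{"age": "old", "activity": "sitting"}] * 9
)

lift = cooccurrence_lift(records, "age", "activity")
for pair, value in sorted(lift.items()):
    print(pair, round(value, 2))
```

In this toy data, “young” and “running” appear together more often than chance would predict, while “old” and “running” appear together less often, which is the kind of pattern the blue and orange cells are meant to surface.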

Among the biases detected, there are few images of women playing music, skateboarding, jumping or snowboarding compared to men. By contrast, women are mostly represented in activities such as cooking or shopping, and are also associated with words like “pretty” and “attractive”.

With Know Your Data, Google has also detected a representation bias in activities by age. Specifically, people over the age of 65 appear less often than younger people in activities such as dancing, swimming or playing.

The tool is still in beta but already offers users several dozen visual datasets, and Google plans to keep adding new ones in the future.