Think for a moment about a machine that has the gift of sight, as if it had a pair of digital eyes, with the ability not just to see but to understand, interpret and make decisions based on what it observes. This capability opens up possibilities in areas such as robotics, security and entertainment.

It’s been a long time since machines could only understand zeros and ones. But thanks to computer vision, they can now analyse and understand the visual world around them. They can recognise faces in photos, distinguish objects and even evaluate patterns. Have you thought about how our mobile phones can do things like organise photos by location or suggest effects to improve our images? All of this is possible thanks to computer vision.

Well, in the field of computer vision, there are incredible tools that are making the impossible become reality. One of these gems is YOLOv8 Pose, a technology that represents a giant leap in real-time pose estimation. Are you ready to explore how all this works in detail? Join me as I guide you step by step through the training of the YOLOv8 Pose estimation model using deep learning.

What is computer vision? + examples

Computer vision (CV) and machine vision are closely related terms for the field of study focused on developing algorithms and systems that allow machines to interpret and process visual information much as humans do with their eyes and brain.

Numerous leading companies have integrated computer vision into their daily operations, taking their technology to another level. Here are some examples where this technology is used innovatively:

CaixaBank

This leading Spanish bank is using computer vision and the YOLOv8 Pose model for facial recognition in its branches, so customers can quickly identify themselves without having to search for their card or remember their PIN. How do they do it? The model analyses customers’ faces. It was trained with a large number of photos of human faces, and it’s already in more than 1,000 branches. People love it because it’s more convenient and secure than the old methods.

But wait, there’s more! CaixaBank is using YOLOv8 Pose to detect objects in the images of our transactions. This model has been trained with photos of credit and debit cards, as well as common objects, helping to reduce the number of fraud cases.

Facebook (facial recognition)

Facebook’s facial recognition system employs deep learning algorithms, which are a form of artificial intelligence, to analyse unique facial features and create digital representations called ‘feature vectors’. This allows for identifying a person in different photos, even if the pose or lighting changes.
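To make the idea of feature vectors a bit more concrete, here is a minimal sketch of how two such vectors might be compared. The four-dimensional vectors, the `cosine_similarity` and `same_person` helpers and the 0.8 threshold are all illustrative assumptions; they are not details of Facebook’s actual system.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("vectors must be non-zero")
    return dot / (norm_a * norm_b)


def same_person(a, b, threshold=0.8):
    """Treat two feature vectors as the same face if they point the same way.

    The threshold is a hypothetical value chosen for this example.
    """
    return cosine_similarity(a, b) >= threshold


# Hypothetical feature vectors (real ones have many more dimensions).
photo_1 = [0.9, 0.1, 0.3, 0.5]
photo_2 = [0.8, 0.2, 0.3, 0.6]   # same person, different lighting
stranger = [0.1, 0.9, 0.7, 0.0]  # someone else

print(same_person(photo_1, photo_2))
print(same_person(photo_1, stranger))
```

Because the comparison depends on the direction of the vectors rather than on raw pixels, two photos of the same face can still match when the pose or lighting changes.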

Moreover, computer vision allows the application to transform your photos and videos into something beyond the ordinary with the use of filters and effects that identify the contours and features of your face. Then, it applies a transformation based on the selected filter. For example, if you choose an ageing filter, the application can simulate how facial features would look over time, based on characteristics it has detected. This allows for manipulating and understanding the image in real time thanks to technology and creativity.

Inditex

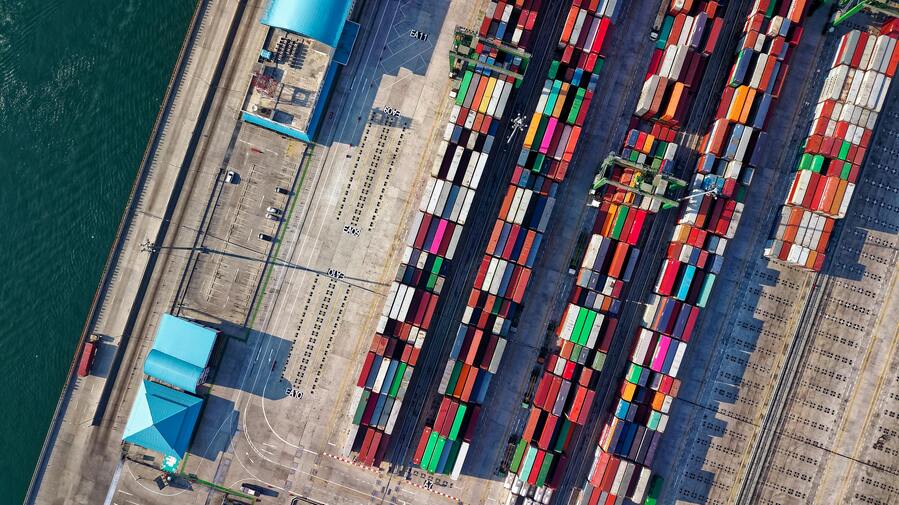

In the world of fashion, computer vision is being used to give a modern twist to manufacturing and logistics operations.

Imagine this: on Inditex’s production lines, they’re using computer vision to examine garments and detect any defects. This is thanks to the installation of cameras that capture images of the garments as they go down the production line. The computer vision software uses pose estimation to identify keypoints on the garments. These are used to compare the garments with Inditex’s quality standards. If the software detects a defect, the garment is taken off the line to be fixed or eliminated.
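As a rough illustration of that comparison step, the sketch below checks detected keypoints against a reference template. The keypoint names, the pixel coordinates, the `find_deviations` helper and the 5-pixel tolerance are hypothetical and only show the general idea, not Inditex’s software.

```python
import math


def find_deviations(detected, reference, tolerance=5.0):
    """Return the names of keypoints that are missing or too far from the reference.

    Both arguments map a keypoint name to an (x, y) position in pixels.
    """
    deviations = []
    for name, (ref_x, ref_y) in reference.items():
        if name not in detected:
            deviations.append(name)  # a missing keypoint counts as a deviation
            continue
        x, y = detected[name]
        if math.hypot(x - ref_x, y - ref_y) > tolerance:
            deviations.append(name)
    return deviations


# Hypothetical template for a shirt and the keypoints found on one garment.
reference_shirt = {"collar": (100, 20), "left_cuff": (20, 150), "right_cuff": (180, 150)}
detected_shirt = {"collar": (101, 22), "left_cuff": (21, 149), "right_cuff": (195, 150)}

defects = find_deviations(detected_shirt, reference_shirt)
print(defects)  # a non-empty list means the garment leaves the line
```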

But they don’t stop at garment inspection. Inditex is also using computer vision to keep inventory levels in check. With cameras aimed at store shelves, the software uses pose estimation to identify products on those shelves.

Now, let’s talk about the field that simplifies keypoint detection and spatial understanding: pose estimation.

Effortless pose estimation: Simplifying keypoint detection and spatial understanding.

In recent years, the field of computer vision has witnessed unprecedented advancements, owing much of its progress to the innovative capabilities of deep learning and artificial intelligence tools. Among the many tasks that have benefited from this revolution, pose estimation stands out as a fundamental and challenging problem that plays a crucial role in computer vision.

Prominent models for pose estimation

Imagine for a moment that computers can see the world with an expert eye for detail. That’s what pose estimation does in the field of computer vision. This task involves understanding not only what’s in an image, but also the exact position and orientation of each object or person. From improving athletes’ performance to giving robots the ability to move autonomously, pose estimation is at the heart of many technological innovations that surround us.

AlphaPose

This model stands out for its high accuracy in detecting keypoints in human poses, providing a detailed and exact representation of posture. Moreover, its ability to detect multiple people in a single image or video sequence makes it versatile in environments with several individuals.

Most notably, its real-time efficiency allows video sequences to be processed and analysed with minimal delay, making it a suitable tool for applications that require an almost real-time response.

OpenPose

It’s a super clever vision model that acts like a sort of digital magic eye that can see and track the keypoints on our body. To achieve all this, OpenPose combines a bit of machine learning with image processing.

Imagine that it can detect things like eyes, nose, fingers and even heels. And you know what’s best? This model is super powerful and can be used for loads of different things. It’s a great option for scientists and developers working with computer vision.

Detectron2

Detectron2 is a true gem in the world of pose estimation and computer vision. More than a single model, it’s like a toolbox that helps you detect and segment objects in images and videos. It’s incredibly useful in loads of computer vision tasks, such as identifying things in medical images or even checking that a factory’s production is going well.

In this article, we focus on Ultralytics’ YOLOv8 Pose model, which introduces an innovative approach to tackling pose estimation challenges through deep learning.

YOLOv8 Pose

Have you heard of YOLOv8 Pose? It’s like a showbiz star in the world of computer vision, and it comes straight from Ultralytics. Built on the YOLOv8 object detection model, it’s like the super athlete of computer vision: fast and accurate.

And the best part is that it’s an improved version, practically an upgrade in everything as they’ve given it a more powerful neural network, a better training method that makes the model even more accurate, and even a new API that makes it easier to use and adjust to what you need.

YOLOv8 Pose: A new era in pose estimation

Now we’ll tell you about the special features it has for detecting things in images quickly and accurately:

First, its brain. YOLOv8 Pose has a way of thinking, a neural network architecture that’s smarter and more precise than its previous version. Then, it trains in a super effective way, as if it were in a gym for computer vision models. It starts with basic knowledge and then adjusts specifically to become an expert in detecting things in a particular type of image.

Finally, its ability to communicate. It has a way of speaking, an API that’s like an easier and more flexible language for developers. It gives them loads of tools to make object detection applications.

Pose estimation with the Ultralytics framework

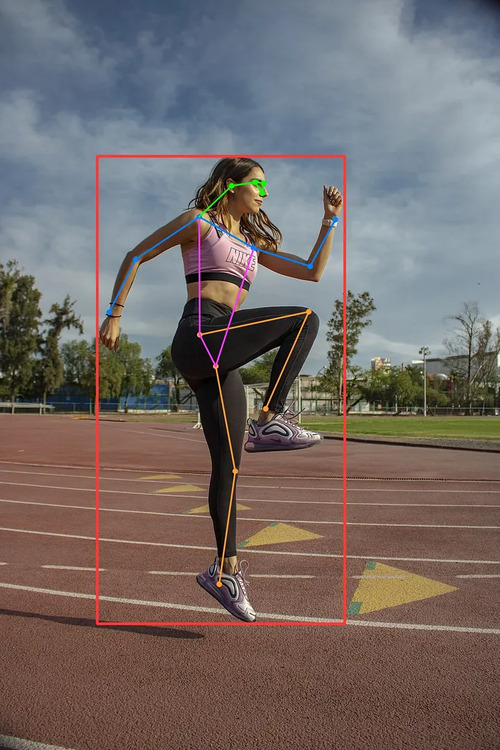

Pose estimation is like looking for special points, called keypoints, in a photo. These points are like the important parts of an image, such as joints or unique features. And the interesting thing is that we can say where they are using coordinates, either in 2D [x, y] like in a drawing, or in 3D [x, y, visible], where ‘visible’ tells us if the point is detectable. This is how the computer learns to read the image.
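The two coordinate layouts can be sketched as a small data structure. The `Keypoint` class and the `parse_keypoints` helper below are hypothetical names for this example, and treating any non-zero third value as "visible" is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Keypoint:
    x: float
    y: float
    visible: bool = True  # only known when the 3-value layout is used


def parse_keypoints(flat, dims=3):
    """Turn a flat list of numbers into Keypoint objects.

    dims=2 expects [x1, y1, x2, y2, ...];
    dims=3 expects [x1, y1, visible1, x2, y2, visible2, ...].
    """
    if dims not in (2, 3):
        raise ValueError("dims must be 2 or 3")
    if len(flat) % dims != 0:
        raise ValueError("list length must be a multiple of dims")
    keypoints = []
    for i in range(0, len(flat), dims):
        x, y = flat[i], flat[i + 1]
        visible = bool(flat[i + 2]) if dims == 3 else True
        keypoints.append(Keypoint(x, y, visible))
    return keypoints


# Two keypoints in the [x, y, visible] layout: the second one is hidden.
points = parse_keypoints([10, 20, 1, 30, 40, 0])
print(points)
```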

The job of a pose estimation model is to predict where certain keypoints are located on objects in an image and also tell us how confident the machine is about those keypoints. This type of estimation is used when we need to identify very precisely the parts of an object and how they are positioned in relation to each other.
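One simple way to use both the positions and the confidence values is to measure the angle at a joint, but only when the model is sure enough about the points involved. In this sketch the `joint_angle` and `elbow_angle` helpers, the keypoint names and the 0.5 confidence cut-off are assumptions made for illustration.

```python
import math


def joint_angle(a, b, c):
    """Return the angle in degrees at point b, between the segments b-a and b-c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("points must not coincide with the joint")
    cos = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos = max(-1.0, min(1.0, cos))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(cos))


def elbow_angle(person, min_conf=0.5):
    """Return the left elbow angle, or None if any keypoint is low-confidence.

    `person` maps keypoint names to (x, y, confidence) tuples.
    """
    needed = ("left_shoulder", "left_elbow", "left_wrist")
    points = [person[name] for name in needed]
    if any(conf < min_conf for _, _, conf in points):
        return None
    shoulder, elbow, wrist = [(x, y) for x, y, _ in points]
    return joint_angle(shoulder, elbow, wrist)


# Hypothetical keypoints for one person: an arm bent at a right angle.
person = {
    "left_shoulder": (100, 100, 0.95),
    "left_elbow": (100, 150, 0.90),
    "left_wrist": (150, 150, 0.80),
}
print(elbow_angle(person))
```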

Ultralytics YOLOv8 pose models

The Ultralytics YOLOv8 framework has a specialisation in pose estimation. It’s like having a dance expert who can identify a person’s key movements. Its pose estimation models are marked with the -pose suffix (for example, yolov8n-pose.pt) and are trained on the COCO keypoints dataset, probably the most famous benchmark for evaluating how good a model is at pose estimation. Ultralytics has documented everything very thoroughly, and the code snippets we’re sharing with you here come directly from their resources. So you have in your hands a solid and reliable foundation for your computer vision projects.

Pre-trained Pose Models

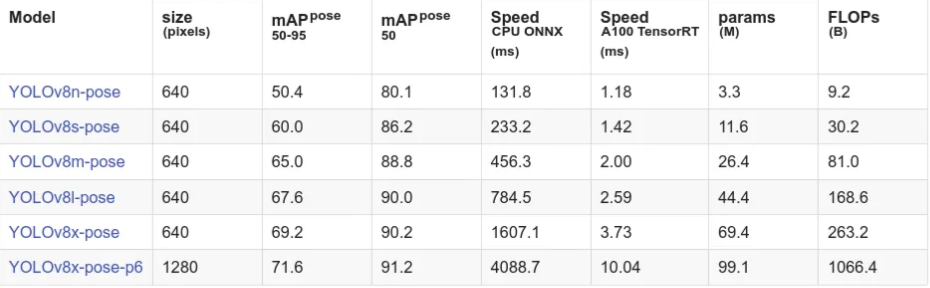

Ultralytics makes available pre-trained YOLOv8 Pose models with a variety of sizes and abilities. These models have been prepared with the COCO keypoints dataset and are ready to be used in your specific pose estimation computer vision projects. Below, you’ll find the details of some of the available models:

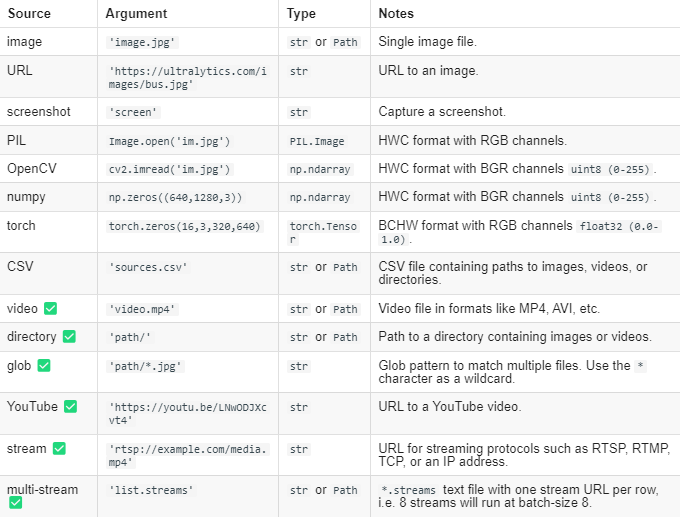

Take advantage of the easy-to-use YOLOv8 Pose

Using a pre-trained YOLOv8 Pose model to predict keypoints is a piece of cake. All you need to do is give an image to the model and it’ll do the rest. Now I’m going to show you how to do it. It’s simpler than you think!

Python

from ultralytics import YOLO

# Load a model

model = YOLO('yolov8n-pose.pt') # Load an official model

model = YOLO('path/to/best.pt') # Load a custom model

# Predict with the model

results = model('https://ultralytics.com/images/bus.jpg') # Predict on an image

CLI

yolo pose predict model=yolov8n-pose.pt source='https://ultralytics.com/images/bus.jpg' # predict with official model

yolo pose predict model=path/to/best.pt source='https://ultralytics.com/images/bus.jpg' # predict with custom model

There are plenty of resources available for making predictions.
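Once you have a prediction, you’ll usually want to read the keypoints back out. The sketch below assumes the result object exposes `keypoints.xy` and `keypoints.conf`, as we understand the Ultralytics results API, and that the model uses the 17 COCO keypoints in their usual order. The `label_keypoints` and `predict_people` helpers and the 0.5 cut-off are hypothetical, so check the Ultralytics documentation for your installed version.

```python
# The 17 COCO keypoints in their usual order.
COCO_KEYPOINT_NAMES = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def label_keypoints(xy, conf, min_conf=0.5):
    """Name one person's keypoints and drop the low-confidence ones.

    xy is a list of 17 [x, y] pairs and conf a list of 17 confidence values.
    """
    named = {}
    for name, (x, y), c in zip(COCO_KEYPOINT_NAMES, xy, conf):
        if c >= min_conf:
            named[name] = (x, y)
    return named


def predict_people(source, weights="yolov8n-pose.pt"):
    """Run a pose model on one image and return a dict of keypoints per person."""
    from ultralytics import YOLO  # imported here so the helper above works without it

    result = YOLO(weights)(source)[0]  # one result per input image
    kpts = result.keypoints
    if kpts is None or kpts.conf is None:
        return []
    return [
        label_keypoints(xy, conf)
        for xy, conf in zip(kpts.xy.tolist(), kpts.conf.tolist())
    ]
```

Calling `predict_people('https://ultralytics.com/images/bus.jpg')` would then give one dictionary per detected person, keyed by body part.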

Results

Step by step: How to train your YOLOv8 Pose model

Training your pose model with YOLOv8 Pose using the Ultralytics framework is a straightforward task. This framework provides training options through Python and command-line interface (CLI) commands. You can either start from scratch or fine-tune a pre-trained model to suit your specific needs in the field of computer vision. Additionally, you have access to code examples to guide your training process, whether you prefer working in Python or via the CLI.
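If you fine-tune on your own images, each one needs a label file. To our understanding, the Ultralytics pose format puts one object per line: the class index, the bounding box (centre x, centre y, width, height) and then x, y and visibility for each keypoint, with coordinates normalised to the image size. The `to_pose_label` helper below is a hypothetical sketch of that layout; confirm the details against the dataset documentation before relying on it.

```python
def to_pose_label(class_id, box, keypoints, img_w, img_h):
    """Build one label line from pixel coordinates.

    box is (x_center, y_center, width, height) in pixels.
    keypoints is a list of (x, y, visibility) tuples in pixels.
    """
    x_c, y_c, w, h = box
    parts = [str(class_id)]
    # Bounding box, normalised to the 0-1 range.
    parts += [f"{v:.6f}" for v in (x_c / img_w, y_c / img_h, w / img_w, h / img_h)]
    # Each keypoint: normalised x and y, then the visibility flag as an integer.
    for x, y, visibility in keypoints:
        parts += [f"{x / img_w:.6f}", f"{y / img_h:.6f}", str(int(visibility))]
    return " ".join(parts)


# One object with a single keypoint at the centre of a 640x480 image.
line = to_pose_label(0, (320, 240, 100, 200), [(320, 240, 2)], 640, 480)
print(line)
```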

Python

from ultralytics import YOLO

# Load a model

model = YOLO('yolov8n-pose.yaml') # build a new model from YAML

model = YOLO('yolov8n-pose.pt') # load a pretrained model (recommended for training)

model = YOLO('yolov8n-pose.yaml').load('yolov8n-pose.pt') # build from YAML and transfer weights

# Train the model

results = model.train(data='coco8-pose.yaml', epochs=100, imgsz=640)

CLI

# Build a new model from YAML and start training from scratch

yolo pose train data=coco8-pose.yaml model=yolov8n-pose.yaml epochs=100 imgsz=640

# Start training from a pretrained *.pt model

yolo pose train data=coco8-pose.yaml model=yolov8n-pose.pt epochs=100 imgsz=640

# Build a new model from YAML, transfer pretrained weights to it and start training

yolo pose train data=coco8-pose.yaml model=yolov8n-pose.yaml pretrained=yolov8n-pose.pt epochs=100 imgsz=640

Verify and make predictions with your model

Once your YOLOv8 Pose model is ready, whether you’ve trained it yourself or are using a pre-trained one, it’s time to put it to the test. You can assess its accuracy on a validation dataset. Additionally, the framework allows you to make predictions on images, enabling you to see your model’s capabilities in action.
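Pose accuracy is commonly scored with object keypoint similarity (OKS), the measure used in COCO keypoint evaluation, which rewards predicted keypoints for landing close to the labelled ones relative to the size of the object. The sketch below follows that general formula. The `object_keypoint_similarity` helper and the per-keypoint constants in `k` are illustrative assumptions, not the values used by COCO or Ultralytics.

```python
import math


def object_keypoint_similarity(pred, truth, area, k):
    """Average similarity over the labelled keypoints of one object.

    pred  : list of predicted (x, y) positions
    truth : list of labelled (x, y, visibility) tuples; visibility 0 means unlabelled
    area  : object area in square pixels, used as the scale
    k     : per-keypoint constants controlling how quickly the score falls off
    """
    total = 0.0
    labelled = 0
    for (px, py), (tx, ty, visibility), k_i in zip(pred, truth, k):
        if visibility <= 0:
            continue  # unlabelled keypoints do not count
        d2 = (px - tx) ** 2 + (py - ty) ** 2
        total += math.exp(-d2 / (2 * area * k_i ** 2))
        labelled += 1
    return total / labelled if labelled else 0.0


truth = [(10, 10, 2), (20, 20, 2)]
k = [0.1, 0.1]  # hypothetical constants

perfect = object_keypoint_similarity([(10, 10), (20, 20)], truth, area=100, k=k)
off = object_keypoint_similarity([(10, 10), (23, 24)], truth, area=100, k=k)
print(perfect, off)
```

A perfect prediction scores 1, and the score drops towards 0 as keypoints drift away from their labels.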

Python

from ultralytics import YOLO

# Load a model

model = YOLO('yolov8n-pose.pt') # load an official model

model = YOLO('path/to/best.pt') # load a custom model

# Validate the model

metrics = model.val() # no arguments needed, dataset and settings remembered

metrics.box.map # map50-95

metrics.box.map50 # map50

metrics.box.map75 # map75

metrics.box.maps # a list containing map50-95 of each category

CLI

yolo pose val model=yolov8n-pose.pt # val official model

yolo pose val model=path/to/best.pt # val custom model

Exporting the model

Once you have trained your pose estimation model and are satisfied with the results, it’s time to take it further. Exporting your model to formats like ONNX or CoreML is a strategic move in computer vision, ensuring seamless integration across various applications and platforms, enhancing both accessibility and versatility.

Whether deploying it on edge devices, integrating it into a mobile app, or making it part of a broader process, exporting unlocks the full potential of your pose estimation solution across a wide range of scenarios. So, bridge the gap between development and real-world application, and let your pose estimation efforts truly shine!

With the Ultralytics YOLOv8 Pose framework, exporting your model is incredibly simple. You can export it in formats like ONNX, CoreML, TensorFlow Lite, and many more! By default, these models are licensed under AGPL-3.0. However, if you need to use them for research and development or in commercial projects and services while maintaining full control over your work, an enterprise licence is also available.
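If you deploy to several platforms, you might wrap the choice of format in a small helper. The mapping below from deployment target to format is our own assumption for illustration (the target names are hypothetical), and `export_for` simply passes the chosen format string to the export call.

```python
# Hypothetical mapping from where the model will run to an export format.
EXPORT_FORMATS = {
    "server": "onnx",
    "ios": "coreml",
    "android": "tflite",
}


def pick_format(target):
    """Return the export format for a deployment target."""
    try:
        return EXPORT_FORMATS[target]
    except KeyError:
        raise ValueError(f"unknown target: {target!r}") from None


def export_for(target, weights="yolov8n-pose.pt"):
    """Load a pose model and export it for the given target."""
    from ultralytics import YOLO  # imported here so pick_format works without it

    model = YOLO(weights)
    return model.export(format=pick_format(target))


print(pick_format("ios"))
```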

Python

from ultralytics import YOLO

# Load a model

model = YOLO('yolov8n-pose.pt') # load an official model

model = YOLO('path/to/best.pt') # load a custom trained model

# Export the model

model.export(format='onnx')

CLI

yolo export model=yolov8n-pose.pt format=onnx # export official model

yolo export model=path/to/best.pt format=onnx # export custom trained model

Final reflection

In an increasingly visual society, computer vision is emerging as a precise and accessible technology that will soon be present in applications beyond our imagination. One of the most exciting aspects is the development of machine learning models that are true marvels. These models can learn from vast amounts of data, enabling them to generate results that are far more accurate than their predecessors.

However, this is just the beginning. YOLOv8 Pose is undoubtedly a gem in the world of computer vision. In the coming years, we expect this model to be deployed across an even broader range of applications. Imagine a world where it enhances transport safety, automates industrial tasks and improves medical diagnostics, all thanks to YOLOv8 Pose.

We invite you to test, verify, predict, and analyse the results of the YOLOv8 Pose models available to you. So, talk to our team and step into a world of possibilities where computer vision is just the beginning.