Imagine a painter who doesn’t need a canvas but can create picturesque landscapes and bring mythical creatures to life using only words. Text-to-image generative AI does exactly that, opening up new forms of collaboration between humans and machines.

In this article, we will explore the state of the art in this rapidly evolving field, diving into pioneering models like MidJourney and Stable Diffusion while uncovering a spectrum of innovative approaches that are redefining how we perceive and create visual content.

But first, we will explore generative AI, how it works, its main architectures, and some key examples. Let’s get started!

Generative AI: A look behind the scenes

Generative AI is a unique branch of artificial intelligence focused on creating new and original content. Unlike other forms of AI that primarily recognise or classify data, this technology has the ability to generate entirely new material.

How does it achieve this? Generative AI models are trained on vast datasets, enabling them to learn the relationships between elements that make up different types of content.

For example, if you ask a generative AI to imagine a sunset landscape, it analyses your description and, based on the data and patterns it has learned about sunsets, creates an image that matches your request.

It’s almost as if it has its own imagination, with the ability to interpret and bring ideas to life.

Main architectures in Generative AI

There are several architectures in the field of generative artificial intelligence, each with its own advantages and specific applications. Below, we will explore the most notable ones:

Generative Adversarial Networks (GANs)

GANs are one of the most popular architectures in generative AI. They consist of two neural networks—the generator and the discriminator—which are trained adversarially.

Picture two opponents: the generator and the discriminator. The generator’s role is to create samples that closely resemble real data, while the discriminator’s job is to distinguish between real data and the generator’s creations.

This competition continuously pushes both networks to improve: as the discriminator gets better at spotting fakes, the generator must produce more convincing samples.
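The adversarial objective can be made concrete with a minimal sketch of the two loss functions. It assumes the discriminator outputs a probability that a sample is real and uses the common binary cross-entropy formulation; the score values are hypothetical, and no networks are actually trained here.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy: low when real samples score high and fakes score low."""
    real_term = sum(-math.log(s) for s in real_scores) / len(real_scores)
    fake_term = sum(-math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Non-saturating generator loss: low when fakes are scored as real."""
    return sum(-math.log(s) for s in fake_scores) / len(fake_scores)

# Hypothetical discriminator outputs (probability that a sample is real).
real_scores = [0.9, 0.8]
weak_fakes = [0.1, 0.2]    # easily spotted by the discriminator
strong_fakes = [0.6, 0.7]  # produced by an improved generator

print("generator loss, weak fakes:  ", generator_loss(weak_fakes))
print("generator loss, strong fakes:", generator_loss(strong_fakes))
print("discriminator loss vs weak:  ", discriminator_loss(real_scores, weak_fakes))
print("discriminator loss vs strong:", discriminator_loss(real_scores, strong_fakes))
```

As the fakes become more convincing, the generator's loss falls and the discriminator's loss rises, which is the tug-of-war described above.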

Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM)

These architectures are key in generative AI, designed to work with sequential data such as text and audio.

RNNs carry a hidden state from one step of a sequence to the next, which lets them take earlier elements into account when processing later ones.

LSTMs are an enhanced form of RNN. Their gated memory cells let them capture long-term dependencies, retaining important details across many steps of a sequence.
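A minimal sketch of the recurrence may help here. It uses a single scalar hidden state and hand-picked weights (both are simplifying assumptions; real RNNs use learned weight matrices, and LSTMs add gates on top of this idea).

```python
import math

def rnn_step(x, h_prev, w_x=0.5, w_h=0.8, b=0.0):
    """One recurrent step: combine the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence):
    """Process a sequence left to right and return the final hidden state."""
    h = 0.0
    for x in sequence:
        h = rnn_step(x, h)
    return h

# Two sequences that end with the same input but start differently.
h_a = run_rnn([1.0, 0.0, 1.0])
h_b = run_rnn([-1.0, 0.0, 1.0])
print(h_a, h_b)
```

The two final states differ even though the last input is identical, because the hidden state still carries a trace of the first element.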

Transformers

Transformer models excel in natural language processing and text generation.

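What sets them apart is their attention mechanism, which lets the model weigh how relevant every element of a sequence is to every other element and process the whole sequence in parallel rather than one step at a time.

A well-known example is ChatGPT, which is built on the GPT family of transformer models.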
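The attention mechanism can be sketched in a few lines of plain Python. This is scaled dot-product attention for a single query; the key, value and query vectors are hypothetical toy values, whereas in a real transformer they are learned projections of token embeddings.

```python
import math

def softmax(values):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
print(weights)
print(output)
```

Keys that point in the same direction as the query receive larger weights, so their values contribute more to the output.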

Variational autoencoders (VAEs)

VAEs are probabilistic models that learn latent representations of data.

They consist of two key components: an encoder and a decoder. The encoder maps the input to a distribution over a latent (hidden) representation, and the decoder reconstructs the input from a point sampled from that distribution. Once trained, new content can be generated by sampling a latent point and decoding it.
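As a rough illustration of the encoder and decoder working together, here is a toy sketch. The encoder and decoder are hand-written functions rather than trained networks (an assumption made purely for illustration), but the sampling step uses the standard reparameterisation form, z = mu + sigma * eps.

```python
import math
import random

def encode(x):
    """Toy encoder: map a 2-D input to the mean and log-variance of a 1-D latent."""
    mu = 0.5 * (x[0] + x[1])
    log_var = -2.0  # fixed, small variance chosen for illustration
    return mu, log_var

def sample_latent(mu, log_var, rng):
    """Reparameterisation: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    sigma = math.exp(0.5 * log_var)
    return mu + sigma * rng.gauss(0.0, 1.0)

def decode(z):
    """Toy decoder: map the latent value back to a 2-D output."""
    return [z, z]

rng = random.Random(0)
x = [1.0, 3.0]
mu, log_var = encode(x)
z = sample_latent(mu, log_var, rng)
x_hat = decode(z)
print(mu, z, x_hat)
```

Because the latent is sampled rather than fixed, decoding repeatedly from the same input yields slightly different outputs, which is what makes the model generative.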

Autoregressive Models

Autoregressive models in generative AI build on previous outputs—imagine you are drawing, and each new stroke is based on the previous one.

These models generate data one step at a time, with each new element constructed upon the last. They are particularly effective for predicting sequences.
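A character-level bigram model is about the smallest autoregressive generator one can write. The sketch below is a toy under obvious simplifications: it looks only one character back and always picks the most frequent follower, whereas real models condition on long contexts and sample from a learned distribution.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which character follows which in the training text."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length):
    """Generate greedily: each new character depends on the one before it."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:
            break  # no known continuation for this character
        out.append(followers.most_common(1)[0][0])
    return "".join(out)

model = train_bigram("abababac")
print(generate(model, "a", 6))  # prints "ababab"
```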

Successful Applications

Generative AI has had a major impact across various fields. Here are some key examples:

- Art and creativity: Generative models can produce visual art from textual descriptions.

- Design and fashion: AI is being used to create unique and innovative fashion designs.

- Film and entertainment: In the film industry, AI helps produce stunning visual effects and realistic scenes.

- Music and composition: Some models can generate original music by learning patterns and musical styles.

- Medicine and healthcare: AI is used to generate detailed medical images and simulate tissues for educational and training purposes.

- Advertising and marketing: The ability to generate visually appealing, personalised content is crucial in advertising. Generative AI can create images and adverts tailored to specific audiences.

Now, let’s explore the models that are making a mark in text-to-image generative AI in more detail.

Most used generative AI models

MidJourney

MidJourney is one of the most widely used text-to-image services. It is a proprietary model, and its architecture has not been published in detail, so claims about its internals should be treated with caution. What can be observed is the output: intricate images generated from textual prompts, with nuanced detail and striking realism.

By integrating textual and visual cues, MidJourney creates vivid landscapes, paints dreamlike scenarios with exceptional precision, and enhances the potential of AI-assisted artistic creation.

CLIP-Guided Generation

CLIP-guided generation builds on CLIP, a model trained with a contrastive image-text objective to place matching images and captions close together in a shared embedding space. An image is generated, or iteratively adjusted, so as to maximise the similarity that CLIP assigns between it and the textual description.

The result is a close coupling of language and visual elements, which produces images that are more coherent with the prompt.
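The scoring step at the heart of this approach can be sketched as a cosine similarity between embeddings. The vectors below are hypothetical stand-ins; a real system would obtain them from CLIP's text and image encoders, and would typically adjust the image by gradient steps rather than simply choosing among finished candidates.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings for one prompt and two candidate images.
text_embedding = [0.9, 0.1, 0.0]
candidate_images = {
    "candidate_a": [0.8, 0.2, 0.1],
    "candidate_b": [0.0, 0.1, 0.9],
}

scores = {
    name: cosine_similarity(text_embedding, embedding)
    for name, embedding in candidate_images.items()
}
best = max(scores, key=scores.get)
print(scores)
print("best match:", best)
```

The candidate whose embedding points in nearly the same direction as the text embedding scores highest and would be kept, or used as the direction in which to push the next refinement.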

Stable Diffusion

Stable Diffusion takes a different approach in the text-to-image generative AI landscape: it is an openly released latent diffusion model that offers fine-grained control over image generation.

The model uses a diffusion-based technique: it starts from random noise and iteratively refines it, progressively shaping it into a coherent image. Because each refinement step is conditioned on the prompt, users can steer visual characteristics directly from text.

Stable Diffusion’s unique approach produces diverse and consistent images, making it a powerful tool for applications ranging from design prototyping to narrative illustration.
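To give a feel for what refining a noise signal means, the sketch below shows the noising relation that diffusion models are trained to undo, in its standard form x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps. It is a toy under stated assumptions: the three numbers stand in for image data, the noise level is hypothetical, and the true noise is used in place of the trained, text-conditioned network that would normally predict it.

```python
import math
import random

def add_noise(x0, alpha_bar, eps):
    """Forward process: blend the clean signal with Gaussian noise."""
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
        for x, e in zip(x0, eps)
    ]

def estimate_clean(x_t, alpha_bar, predicted_eps):
    """Invert the blend, given a prediction of the noise that was added."""
    return [
        (x - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
        for x, e in zip(x_t, predicted_eps)
    ]

rng = random.Random(0)
x0 = [0.2, -0.5, 0.9]                     # stand-in for clean image values
eps = [rng.gauss(0.0, 1.0) for _ in x0]   # the noise that gets mixed in
alpha_bar = 0.3                           # hypothetical noise level; smaller is noisier

x_t = add_noise(x0, alpha_bar, eps)
x0_hat = estimate_clean(x_t, alpha_bar, eps)  # oracle: uses the true noise
print(x_t)
print(x0_hat)
```

With a perfect noise prediction the clean signal is recovered exactly. A real sampler has only an approximate prediction, so it removes a little noise at a time over many steps.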

DALL·E

DALL·E, developed by OpenAI, generates images from textual prompts, pushing the boundaries of creativity by producing visuals of unconventional concepts. From “a two-story pink house shaped like a shoe” to “a cube made of the night sky,” DALL·E showcases the potential of generative AI to bring surreal ideas to life, and it continues to fuel discussion about the intersection of creativity and artificial intelligence.

Does Kandinsky-2 Surpass MidJourney? An Innovative Open-Source Model Transforms Text into Images

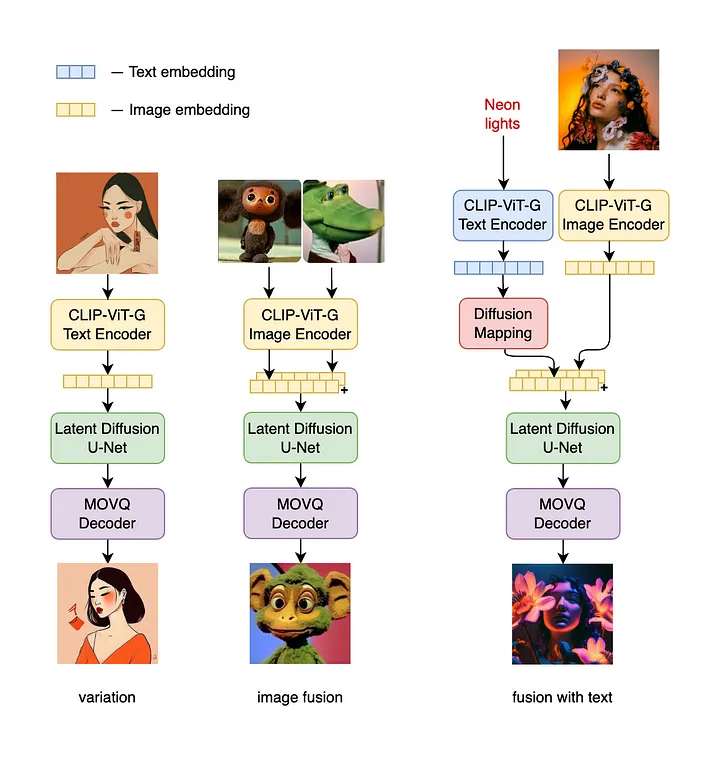

Imagine Kandinsky-2 as an ultra-advanced digital artist. Kandinsky 2.2 is a significant step up from version 2.1. The update introduces a more powerful image encoder, CLIP-ViT-G, which improves both the visual quality of generated images and their alignment with the text prompt. It also adds ControlNet support, which gives more precise control over the generation process.

Together, these changes yield more accurate and aesthetically refined outputs and open up new possibilities for text-guided image manipulation.

The Architecture Behind Kandinsky-2

Below are the key components of this model’s architecture and the process it follows to generate unique and captivating content:

- Text Encoder (XLM-Roberta-Large-Vit-L-14) – 560M

- Diffusion Image Prior – 1B

- CLIP Image Encoder (ViT-bigG-14-laion2B-39B-b160k) – 1.8B

- Latent Diffusion U-Net – 1.22B

- MoVQ Encoder/Decoder – 67M

Kandinsky-2 Checkpoints

Kandinsky-2 is distributed as several checkpoints, each a diffusion model aimed at a different image-generation task:

- Prior: A prior diffusion model that maps text embeddings to image embeddings.

- Text-to-Image / Image-to-Image: A decoding diffusion model that maps image embeddings to images.

- Inpainting: A decoding diffusion model that maps image embeddings and masked images to complete images.

- ControlNet-Depth: A decoding diffusion model that combines image representations with additional depth information to generate detailed images.

Optimisation for Use

It is important to note that Kandinsky models, with their multi-stage architecture and large number of parameters, require substantial computational resources to run well.

If you are considering using these models for text-to-image generation or manipulation tasks, it is essential to evaluate the available hardware specifications.

For instance, on hardware with limited resources, such as my RTX 2060 with 8GB of VRAM, these models can run into memory constraints. The size of the architecture and its processing demands can lead to out-of-memory errors or performance bottlenecks on such configurations.
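A back-of-the-envelope calculation shows why memory becomes a constraint. The sketch below sums the component sizes listed under “The Architecture Behind Kandinsky-2” and converts them to the memory needed for the weights alone. It is a rough estimate under stated assumptions: every component is counted as if loaded at once, 1 GB is taken as 10^9 bytes, and activations and other runtime overhead are ignored.

```python
# Parameter counts from the architecture list, in billions.
components = {
    "text_encoder": 0.56,
    "diffusion_image_prior": 1.0,
    "clip_image_encoder": 1.8,
    "latent_diffusion_unet": 1.22,
    "movq_encoder_decoder": 0.067,
}

def weight_memory_gb(params_billions, bytes_per_param):
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bytes_per_param

total = sum(components.values())
fp32_gb = weight_memory_gb(total, 4)  # 32-bit floats: 4 bytes per parameter
fp16_gb = weight_memory_gb(total, 2)  # 16-bit floats: 2 bytes per parameter

print(f"{total:.3f}B parameters: {fp32_gb:.1f} GB in fp32, {fp16_gb:.1f} GB in fp16")
```

Roughly 4.6 billion parameters come to about 9.3 GB at half precision before any image is generated, which already exceeds an 8GB card. In practice not every component has to be resident at the same time, so actual usage may be lower.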

What you’ll need to install it

Let’s get started! First, we need to ensure we have everything required to install Kandinsky-2. I’ll guide you through the key elements you’ll need.

    !pip install 'git+https://github.com/ai-forever/Kandinsky-2.git'
    !pip install git+https://github.com/openai/CLIP.git

Running the model with a few lines of code

    from kandinsky2 import get_kandinsky2

    # Load the text-to-image model onto the GPU.
    model = get_kandinsky2(
        'cuda',
        task_type='text2img',
        model_version='2.1',
        use_flash_attention=False
    )

    # Generate one 768x768 image; [0] takes the first image of the batch.
    image_cat = model.generate_text2img(
        "red cat, 4K photo",
        num_steps=100,
        batch_size=1,
        guidance_scale=4,
        h=768, w=768,
        sampler='p_sampler',
        prior_cf_scale=4,
        prior_steps="5"
    )[0]

Different model versions can be selected through model_version: 2.0, 2.1 or 2.2.

Advantage over other models

One notable advantage that sets the Kandinsky models apart from many other text-to-image generative AI models is their ability to blend images as well as text: the inputs to a single generation can combine textual descriptions with two images, each given its own weight.

Whereas a purely text-driven model relies on the prompt alone, this lets us generate images that emerge from a fusion of different visual elements, which opens new doors for creative exploration.

Using image fusion

Let’s generate another image, such as a tiger:

    image_tiger = model.generate_text2img(
        "tiger",
        num_steps=100,
        batch_size=1,
        guidance_scale=4,
        h=768, w=768,
        sampler='p_sampler',
        prior_cf_scale=4,
        prior_steps="5"
    )[0]

And now, let’s combine them using the mix_images method, giving each image an equal weight 🐈 + 🐅 = ❓:

    image_mixed = model.mix_images(
        [image_cat, image_tiger], [0.5, 0.5],
        num_steps=100,
        batch_size=1,
        guidance_scale=4,
        h=768, w=768,
        sampler='p_sampler',
        prior_cf_scale=4,
        prior_steps="5",
    )[0]

We have created a rare and magnificent new animal—truly remarkable, isn’t it?
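One way to picture what the weights [0.5, 0.5] do is as a weighted blend of the two images in embedding space. The sketch below works on that assumption; it is an illustration of the idea, not a description of the library's internals, and the embedding values and the helper name mix_embeddings are hypothetical.

```python
def mix_embeddings(embeddings, weights):
    """Weighted sum of equal-length embedding vectors."""
    if len(embeddings) != len(weights):
        raise ValueError("need exactly one weight per embedding")
    dim = len(embeddings[0])
    return [
        sum(w * emb[i] for emb, w in zip(embeddings, weights))
        for i in range(dim)
    ]

# Hypothetical image embeddings for the two generated pictures.
cat_embedding = [1.0, 0.0, 0.2]
tiger_embedding = [0.0, 1.0, 0.4]

mixed = mix_embeddings([cat_embedding, tiger_embedding], [0.5, 0.5])
mostly_cat = mix_embeddings([cat_embedding, tiger_embedding], [0.8, 0.2])
print(mixed)
print(mostly_cat)
```

Shifting the weights towards one image pulls the blended embedding, and therefore the decoded picture, closer to that image.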

Authors

This article extends its gratitude and recognition to the esteemed authors who have led the advancements discussed here. Thanks to their dedication and relentless efforts, the field of generative AI has witnessed extraordinary progress in recent years.

- Arseniy Shakhmatov: GitHub, Blog

- Anton Razzhigaev: GitHub, Blog

- Aleksandr Nikolich: GitHub, Blog

- Vladimir Arkhipkin: GitHub

- Igor Pavlov: GitHub

- Andrey Kuznetsov: GitHub

- Denis Dimitrov: GitHub

Final thoughts

In the captivating realm where AI converges with art, generative text-to-image AI stands as a testament to the boundless potential of human-machine collaboration.

This article has surveyed the main architectures and models behind that intersection and closed with a closer look at Kandinsky 2.2, a model that improves on its predecessors in several concrete ways.

The CLIP-ViT-G image encoder brings better aesthetics and closer alignment with the text prompt, while the ControlNet mechanism gives creators finer control over text-guided imagery. Together they show how quickly AI-driven artistic expression is maturing.