Big Data vs Data Warehouse

In this article you will discover how to implement modern data warehousing solutions in the cloud, going through all the layers, from the ELT processes that perform data intake at source in a data lake, through different states of data quality and governance, to end up in a corporate data warehouse optimized for large volumes of data.

For years, the world of data warehousing has been adapting to the needs that were emerging using different strategies such as vertical scalability of servers, partitioning or the evolution of data models (star, snowflake, data vault …), all to be able to cope with the different scenarios that the growing volume of data was proposing. However, the explosion of big data and cloud technologies has allowed us to evolve beyond simply adding more capacity to traditional systems.

Data Warehouse Architecture in the Cloud

Faced with this situation, today we find solutions adapted to build a modern datawarehouse with total guarantee of success. In order to achieve such success we have to move away from the improvements at the level of data structure in the model itself (which is still very important) and propose changes directly in the architecture of data processing and storage.

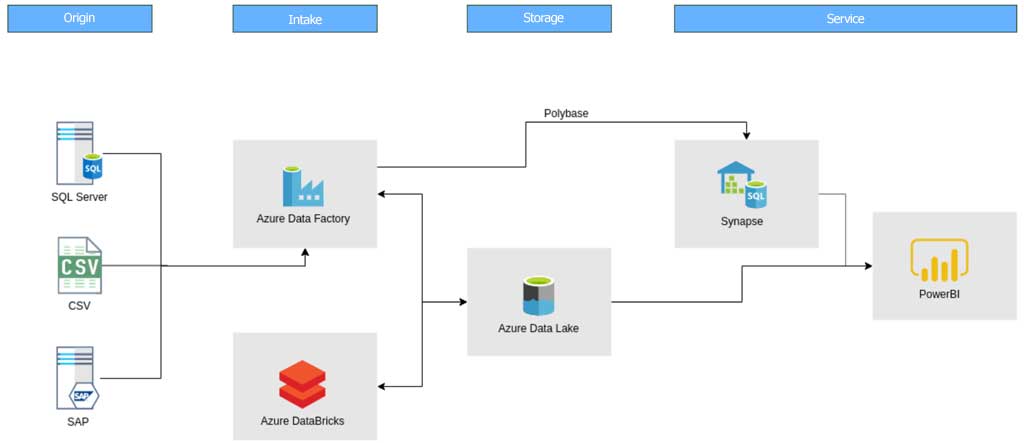

The parts involved in this new data processing and storage architecture for data warehouses are as follows:

Source layer: this layer always contains pieces “external” to the model, since its heterogeneity makes it possible to insert different pieces of different types at this point, such as, in addition to those shown in the image, logs, photos, different databases (sql data warehouse, postgres, Elastic, Mongo, Casandra…), xmls, etc.

- Intake and processing layer: the power that spark offers us for the massive parallel processing becomes essential in this layer, since the volume of data to be treated is possibly very high. This layer interacts with the next one in a cyclical way to be able to process the data, modeling them and providing them with quality.

- Storage layer: the use of a file system like DataLake in this layer will provide us with several advantages (we will go into this point in more detail later).

- Service layer: in this layer it is crucial to have a database optimized for data warehouse that allows us to store the data partitioned and distributed in different nodes to process queries, using parallelism and that the response speed is the minimum possible. In addition, we must also have some tool that allows us to exploit the DWH data to create reports and dashboards.

The Data Lake importance

As we mentioned before, we are going to talk about the importance of building a good Data Lake as a data repository. In a classic DWH architecture, there were different layers within the database itself that allowed us to store data without adapting it to the specific model for DWH, such as the ODS (Operational Data Store) or Staging layers. Such layers, in a modern DWH are replaced by the Data Lake, which has several advantages:

- As it is a file system, it is faster to access its information, since parallel processing tools can be used.

- The use of it as a data repository, thus having a single centralized point where all the company’s data is located.

- Derived from the previous point, another advantage is that it makes a significant change in the intake of files according to the “classic” form, where the processes followed an ETL structure (Extract-Transform-Load). However, now thanks to the use of the Data Lake as a data repository, the ELT (Extract-Load-Transform) form is used since it is possible to leave the files without formatting or normalizing them in the Data Lake and then perform transformations on them, thus overloading the source systems as little as possible.

- To be able to give access to this repository to different applications or company personnel (data scientists) in order to exploit such data.

- As it is a file system, the cost of storage is also cheaper than in a database.

- It allows us to create levels of organization of the data according to its quality and maturity.

Let’s focus on this last point, since being able to structure different levels of data maturity will allow us to better ensure that the information that will reach the end user is reliable.

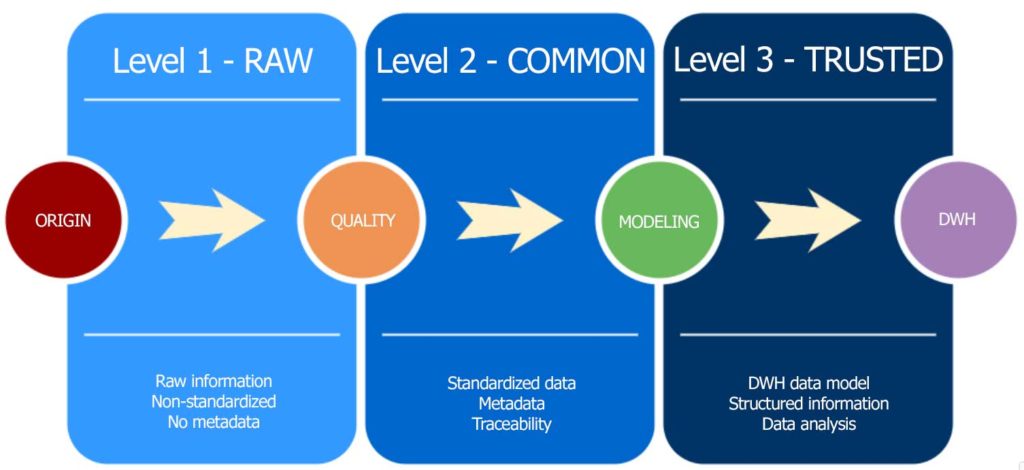

In this case, we have identified three different levels of maturity that follow the following criteria:

- Level 1 – Raw: this first level will store the raw data as it comes in its original systems.

- Level 2 – Common: the second level is where the data quality and data governance rules to be defined will be applied to the data, in order to obtain reliable sources of information.

- Level 3 – Trusted: in the last level will be the data already modelled, with the past quality rules (avoiding null or badly informed values, for example), which will allow to access them in an easy and structured way to make analysis of them

Due to the implementation of these layers, the architecture requires, as we mentioned before, a cyclical interaction between this storage layer in Data Lake and the processing layer with Spark.

Conclusions

We have reviewed in broad terms the changes and evolutions that the world of the Data Warehouse has had to undergo in order to adapt to the particularities that Big Data has brought to the world of technology. Thanks to these adaptations we can now build a DWH with the certainty that it will be reliable, safe and fast, a guarantee of success.

* Note 1. For this article we have simplified the architecture to the most basic possible. We could increase the complexity of it by adding more tools for real-time event handling, e.g., data governance tools, tools for making OLAP cubes in the service layer, etc.

* Note 2. As you can see, we have used Azure’s tools for this specific example, but each cloud has specific tools that could be replaced by Azure’s tools to obtain the same results. It could even be done with on-premise tools.

[/et_pb_text][/et_pb_column][/et_pb_row][et_pb_row _builder_version=”4.14.2″ _module_preset=”default” global_colors_info=”{}”][et_pb_column type=”4_4″ _builder_version=”4.14.2″ _module_preset=”default” global_colors_info=”{}”][et_pb_image src=”https://opensistemas.com/wp-content/uploads/2022/02/os_en_ebook_Intelligen-Data-Lake-980×315-1.jpeg” title_text=”ebook_Intelligen-Data-Laket” url=”https://opensistemas.com/en/intelligent-data-lake-data-based-strategic-decisions/” url_new_window=”on” _builder_version=”4.14.2″ _module_preset=”default” global_colors_info=”{}”][/et_pb_image][/et_pb_column][/et_pb_row][/et_pb_section]